How can executive performance dashboards support child welfare agency effectiveness?

FEBRUARY 3, 2021

LESSONS FROM OTHER FIELDS

By Lynda Blancato, project leader, and Scott Kleiman, managing director, Harvard Kennedy School Government Performance Lab*

Performance dashboards can give child welfare agency leaders visibility into operational and outcome trends throughout the system, allowing them to identify red flags and intervene quickly before problems become more serious, and to elevate and spread practices that are working well. Where a response to declining or stagnant performance is not readily apparent, executive dashboards also equip leaders to hold follow-up discussions with staff to further diagnose challenges and implement solutions. However, two common design problems limit leaders' ability to use these dashboards to improve results: agency directors are not looking at the most useful data, and data are presented in ways that make it difficult to uncover decision-shaping insights.

This strategy brief details a framework and approach for developing executive dashboards for leaders of public child welfare agencies, including strategies for presenting data in ways that enable leaders to respond to performance trends in real time. The appendix to this brief features the executive dashboard metrics that Michigan’s Children’s Services Agency developed using this approach.

For more detailed information on the application of this approach in a jurisdiction, see the companion brief, How did Michigan’s Children’s Services Agency develop and implement executive performance dashboards?

Typical dashboards impede effective decision-making

Child welfare leaders interact with data about the performance of their system dozens of times per week. Most have internal performance dashboards that they review in regular meetings with their senior leadership teams, yet many find it difficult to move beyond compliance-focused reporting to using data to shape decisions, track progress toward goals, and focus agency attention on concerning trends that may require additional support.

Two common design challenges keep child welfare leaders from using performance dashboards effectively to improve results:

- Agency executives are not looking at the most useful data. Some leaders review dashboards with a narrow set of measures, often focused on compliance requirements or the crisis of the moment, that limit visibility into other areas of the system. Other agencies have dashboards that present duplicative information across similar charts, so time goes to interpreting redundant trends rather than discussing operational changes that could improve performance. Agencies also often include metrics of limited value for managing operations, such as the number of media reports or legislative inquiries. Other topics, such as racial disparities, are treated as one-off analyses instead of areas to be tracked regularly over time.

- Data are presented in ways that make it difficult to uncover decision-shaping insights. Even when the right data are selected, choices about how the data are visualized influence whether leaders can draw meaningful insights that shape their decisions. Data presented with little context or disaggregation are time-consuming to interpret. Overly technical metrics, such as “odds ratios,” can be hard to use for leaders without a background in data analysis. Executive dashboards also commonly include metrics required for federal reporting, legislative mandates, or litigation-related agreements; these require complicated calculations aggregated across multiple sources, making it difficult to attribute trends to specific challenges or changes in agency practice.¹

Identifying and prioritizing data to include in executive dashboards

Agency leaders face a nearly unlimited number of potential metrics that could be prioritized for frequent attention and review. Without a structured approach for developing a set of executive dashboards, leaders risk being inundated by data or overlooking a key aspect of the system (especially one that has usually performed well in the past).

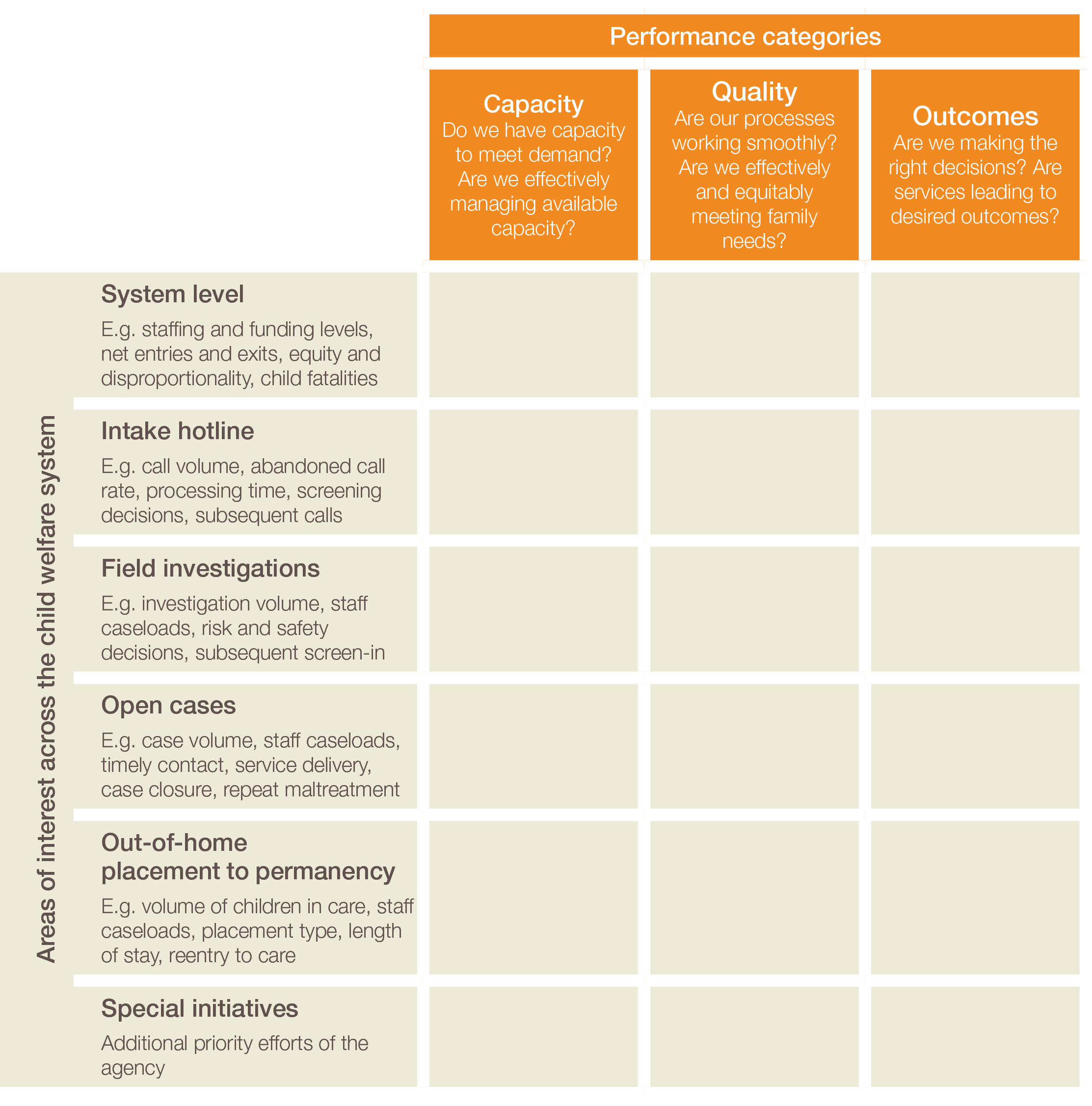

Nearly all important agency performance questions fall into one of three categories: system capacity, program quality, or child and family outcomes:

- System Capacity: Does the agency have sufficient resources in place to meet the demand and need for services? Is the agency effectively utilizing and distributing available resources across the jurisdiction?

- Program Quality: Are agency programs and processes functioning efficiently and effectively? Are services being delivered in high-quality ways that meet child and family needs? Are services effectively reducing racial disproportionality and disparities in the system?

- Child and Family Outcomes: Is the agency making the right decisions about cases as they progress through the child welfare system? Are the agency’s interventions successful in keeping children safe and improving outcomes for families?

For each major area of the child welfare system (for example, intake hotline, field investigations, open cases, and out-of-home placements), tracking a small number of metrics in each of these three categories can offer leaders a comprehensive line of sight across system operations and outcomes. Leaders can also use these three dimensions of performance to establish dashboard metrics about system-wide trends (such as child maltreatment fatalities or net entries-to-exits) and other priority areas of attention. See the accompanying diagram for an illustration of this framework.

To apply this approach, agency leadership begins by brainstorming key management questions or issue areas related to capacity, quality, and outcome indicators for each major area of the child welfare system. In developing this list, the leadership team will want to consider common challenges as well as what information is needed to make operational decisions. For example, when considering outcome indicators for the intake hotline, a key question might be “Is the agency screening in reports that potentially should have been screened out?”

Next, after generating a comprehensive list of questions for each major area of the child welfare system, the team should prioritize the two to four most important questions or issues within each performance category (capacity, quality, outcomes) for inclusion in the final dashboards. Often this involves choosing a mix of questions that can serve as early indicators of a problem and questions oriented toward retrospectively monitoring progress.

After the most important management questions have been identified, agencies can design metrics that address each one. For example, a related metric for the intake hotline might be the share of screened-in allegations where the investigation finds no evidence of child abuse or neglect. In designing each metric, agency leaders should favor indicators that draw on existing administrative data and that will help them diagnose the challenges most likely to emerge. It can also be helpful to pick metrics that agency leaders are already familiar with interpreting.
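As an illustration of how such a metric might be computed from administrative records, the sketch below calculates, for each month, the share of screened-in reports whose investigation found no evidence of abuse or neglect. The record layout (`Investigation`, `screened_in`, `finding`) and the `"no_evidence"` finding code are hypothetical placeholders for whatever fields an agency's case management system actually provides.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Investigation:
    # Hypothetical record layout; real field names depend on the agency's data system.
    month: str          # reporting month, e.g. "2020-11"
    screened_in: bool   # whether the intake report was screened in for investigation
    finding: str        # hypothetical finding code, e.g. "no_evidence" or "substantiated"


def share_screened_in_without_evidence(records):
    """Return {month: share of screened-in reports whose investigation found no evidence}."""
    totals = defaultdict(int)
    no_evidence = defaultdict(int)
    for r in records:
        if not r.screened_in:
            continue  # screened-out reports are outside this metric's denominator
        totals[r.month] += 1
        if r.finding == "no_evidence":
            no_evidence[r.month] += 1
    return {m: no_evidence[m] / totals[m] for m in sorted(totals)}


if __name__ == "__main__":
    sample = [
        Investigation("2020-11", True, "no_evidence"),
        Investigation("2020-11", True, "substantiated"),
        Investigation("2020-11", False, "none"),
        Investigation("2020-12", True, "no_evidence"),
    ]
    print(share_screened_in_without_evidence(sample))
    # {'2020-11': 0.5, '2020-12': 1.0}
```

Tracked month by month, a rising share could prompt the follow-up question of whether the hotline is screening in reports that might have been screened out.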

The last stage of the process involves working with the agency’s research or quality-improvement team to identify data sources for each of the dashboards, design the presentation for each chart, and build protocols for generating the dashboards at high-frequency intervals.

The resulting set of metrics will equip agency teams with a combination of leading indicators to detect early warning signs of system distress and lagging indicators to show whether the system is achieving desired results. This kind of structured process for prioritizing metrics can be helpful even if a jurisdiction categorizes areas of performance differently than described in the diagram.

Presenting data to aid interpretation and prompt follow-up action

For performance dashboards to help agency leaders generate operational changes that improve results, agencies must present metrics with enough context that agency leaders can uncover actionable insights and determine follow-up strategies.

There are five dashboard design elements that can position agency leaders to more effectively respond to performance trends in real time:

- A long time horizon that shows performance trends and seasonal variation over time, ideally presenting monthly intervals going back two or more years. This enables leaders to respond quickly when concerning trends emerge and to monitor whether follow-up interventions deliver the intended improvements.

- A target benchmark or reference line that allows leaders to contextualize performance and determine the urgency of possible reforms. For example, an agency may have a target caseload for investigators, and interpreting trends in the context of deviation from this target will help determine the strength of performance or need for intervention.

- Disaggregation by operationally meaningful subunits, such as geographic region, race/ethnicity, child age, or case characteristics, which helps leaders identify areas with stronger practices to spread and areas with lower performance that may need additional support. For example, by breaking out a metric such as the frequency of face-to-face contacts with children in open cases by region, an agency may uncover a weaker-performing region driving statewide trends and intervene early to provide additional supports. Likewise, aggregated data presented as an average hide outliers; it is often better to show data in ways that let leaders see the frequency of the worst results, which may need immediate attention, and the best results, which may offer lessons about effective practices to spread.

- Solutions-focused discussion questions and guidance for interpreting trends, which help jump-start and focus discussion so that agency leaders can swiftly turn their attention to identifying operational changes that address concerning trends or stagnant performance. Because executive dashboards often provide a high-level picture of agency performance, drilling down to individual county or field-unit performance, or conducting selected case reviews, may provide greater insight into potential operational changes.

- Explanation for why strong performance on each measure matters for client outcomes, which prompts leaders to consider trends in the context of child safety, permanency, and well-being. This facilitates the design of solutions focused on the opportunities that will matter most for children and their families.
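The disaggregation element can be made concrete with a small sketch. Assuming hypothetical per-child records of region and whether a face-to-face contact occurred in the last 30 days, the code computes the statewide share alongside each region's share and flags regions below a target. The region names, the records, and the 90% target are invented for illustration.

```python
from collections import Counter


def contact_rates(records):
    """records: iterable of (region, had_contact) pairs, one per child in an open case.

    Returns (statewide_rate, {region: rate}).
    """
    seen = Counter()
    contacted = Counter()
    for region, had_contact in records:
        seen[region] += 1
        if had_contact:
            contacted[region] += 1
    statewide = sum(contacted.values()) / sum(seen.values())
    by_region = {r: contacted[r] / seen[r] for r in sorted(seen)}
    return statewide, by_region


def regions_below_target(by_region, target):
    """Return the regions whose rate falls short of the target, weakest first."""
    below = [r for r, rate in by_region.items() if rate < target]
    return sorted(below, key=lambda r: by_region[r])


if __name__ == "__main__":
    # Hypothetical data: three regions of 10 children each; Region C lags behind.
    data = (
        [("Region A", True)] * 10
        + [("Region B", True)] * 10
        + [("Region C", True)] * 6 + [("Region C", False)] * 4
    )
    statewide, by_region = contact_rates(data)
    print(round(statewide, 3))                    # 0.867
    print(by_region)                              # {'Region A': 1.0, 'Region B': 1.0, 'Region C': 0.6}
    print(regions_below_target(by_region, 0.90))  # ['Region C']
```

In this invented example the statewide figure of roughly 87% sits only a few points below the target, while the breakdown shows that a single region at 60% accounts for the entire shortfall.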

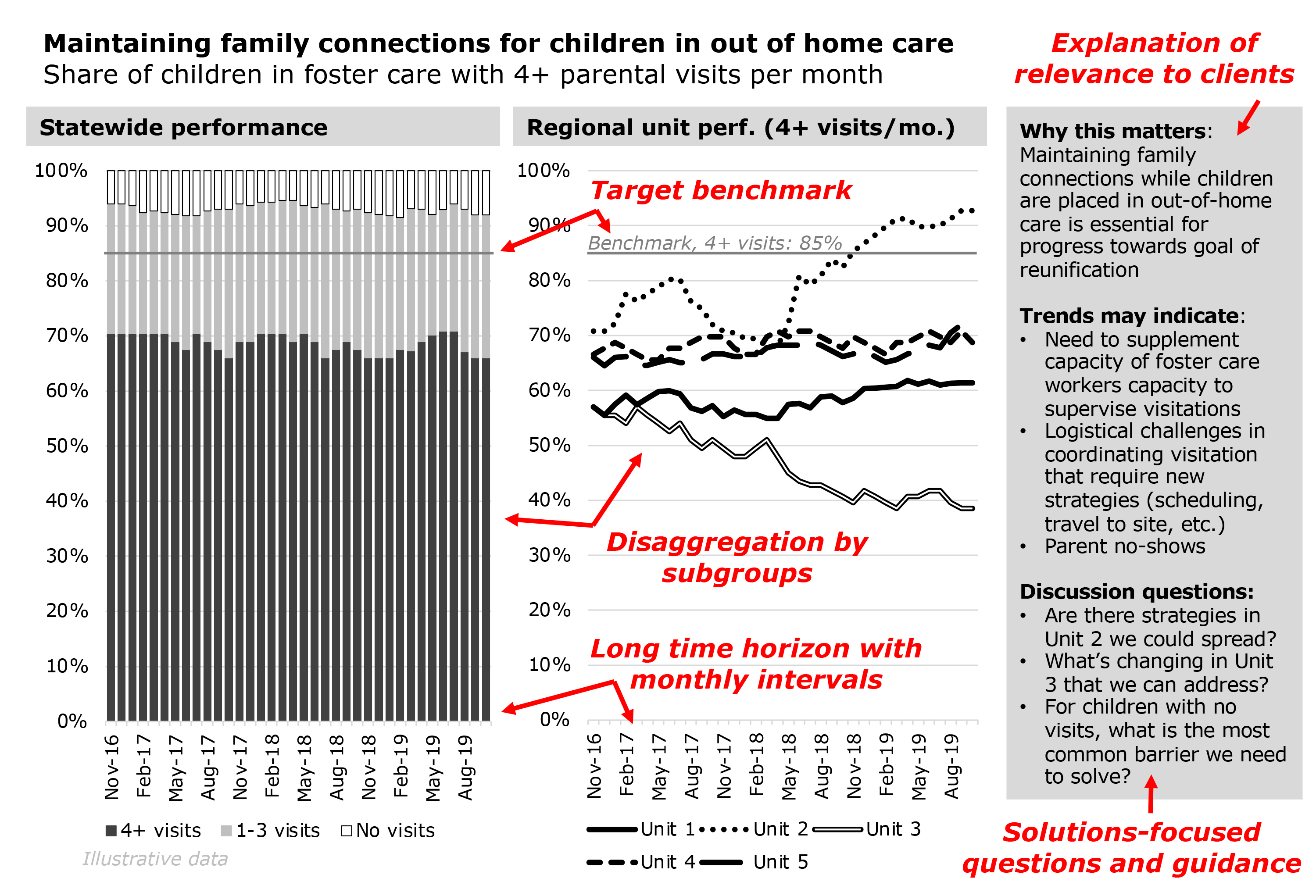

This illustrative child welfare executive dashboard shows how often children in out-of-home care visit with their parents. The chart on the left shows the share of children statewide by the number of family visits they have each month over the last three years. The chart on the right shows, for the same period, the share of children in each regional unit who meet a clear target (four or more visits each month).

Sample child welfare executive dashboard illustrating five key design elements

This chart features the five design elements described above.

First, it shows the monthly trend over the past three years, so agency staff can easily tell if there have been improvements, declines, or steady performance in the share of children with the target number of family visits; having three years of data also enables leaders to quickly assess how seasonal variation may be influencing results.

Second, it shows the performance relative to a benchmark of 85% of children receiving four or more family visits so that leaders can easily determine how urgently reforms may be needed.

Third, it disaggregates the data both by the share of children with four or more, one to three, and no visits per month (left) and by the system’s five regional units (right). Leaders can therefore see the share of children with the worst outcomes who may require urgent attention (those with no visits) and identify the regional unit with the strongest performance (Unit 2) as a potential source of best practices to spread.

Fourth, the dashboard provides guidance to help leaders interpret possible implications of the trends and suggests solutions-focused discussion questions for consideration by senior leadership.

Fifth, it reminds leaders that more frequent family visits are often associated with children in foster care returning home more quickly.
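The calculation behind the left-hand chart can be sketched as follows, assuming one monthly visit count per child. The bucket boundaries (no visits, one to three, four or more) and the 85% benchmark come from the example above; the visit counts themselves are invented.

```python
def visit_distribution(visit_counts):
    """Share of children with no visits, one to three visits, and four or more visits in a month."""
    n = len(visit_counts)
    none = sum(1 for v in visit_counts if v == 0)
    some = sum(1 for v in visit_counts if 1 <= v <= 3)
    target = sum(1 for v in visit_counts if v >= 4)
    return {"none": none / n, "1-3": some / n, "4+": target / n}


def meets_benchmark(distribution, benchmark=0.85):
    """True if the share of children with four or more visits reaches the benchmark."""
    return distribution["4+"] >= benchmark


if __name__ == "__main__":
    # Hypothetical visit counts for 20 children in one month.
    counts = [4] * 15 + [2] * 3 + [0] * 2
    dist = visit_distribution(counts)
    print(dist)                   # {'none': 0.1, '1-3': 0.15, '4+': 0.75}
    print(meets_benchmark(dist))  # False
```

Repeating this for each month, and for each regional unit, would produce series like those plotted in the two charts. Keeping the "none" bucket separate, rather than reporting only the 4+ share, is what lets leaders see the children who may need urgent attention.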

Appendix: Executive dashboards from Michigan’s Children’s Services Agency

Below is the full set of performance dashboard metrics that Michigan’s Children’s Services Agency developed for monitoring its system operations and outcomes. Each dashboard measure is connected to a key management question that the agency seeks to answer through available data.

The resources below may be useful for agency leaders working to develop or refine executive performance dashboards tailored to the priorities and needs of their own jurisdictions.

SYSTEM LEVEL

| Category | Key Question | Related Measure |
|---|---|---|
| Capacity | 1. Do we have sufficient staff capacity to effectively manage the needs of our system? | Count of supervisors, field investigators, foster care case managers, other case carrying staff, centralized intake specialists, staff in training, vacant positions |
| | 2. Are we effectively allocating and deploying available funding? | For current fiscal year, comparison of allocated budget versus actual expenditures by month |
| Quality | 3. Is our reporting system functioning effectively? | Ratio of reports of maltreatment to total number of serious child injuries in Medicaid billing records |
| | 4. Are we effectively reducing disproportionality and disparities in outcomes across our system? | Comparison by race/ethnicity of child: overall child population of state, share of screened-in reports, share of substantiated maltreatment, share entering out-of-home care, share achieving permanency within 12 months, share in care 24+ months |
| | 5. Are we reducing entries into out-of-home care and supporting children to exit care? | Net entries to exits for out-of-home care |
| Outcomes | 6. Are we effectively reducing the occurrence of child fatalities and near fatalities? | Among all child fatalities and near fatalities attributed to maltreatment, share with prior interaction with child welfare system AND Count of all child fatalities from non-natural causes |

CENTRALIZED INTAKE

| Category | Key Question | Related Measure |
|---|---|---|
| Capacity | 1. Do we have sufficient staff capacity to effectively manage the needs of our system? | Count of supervisors, field investigators, foster care case managers, other case carrying staff, centralized intake specialists, staff in training, vacant positions |
| | 2. Do we have sufficient staff capacity to manage the volume of contacts? | Ratio of total contacts processed to intake workers; among all calls presented, share of calls abandoned |
| Quality | 3. Are we processing contacts efficiently? | Share of contacts processed with <1 hour, 1-3 hours, 3-5 hours, or 5+ hours between receipt of contact and screening decision |
| | 4. Are we making consistent screening decisions? | Among all reports of child maltreatment, share of reports screened in for investigation |
| | 5. Are we screening out reports that may have benefited from being screened in? | Among reports that were screened out, share of families with subsequent contact to centralized intake / screen-in within following 3 months |
| Outcomes | 6. Are we screening in reports that potentially should have been screened out? | Among all investigations, share resulting in a Category V disposition AND Number of reconsideration requests by outcome |

FIELD INVESTIGATIONS

| Category | Key Question | Related Measure |
|---|---|---|
| Capacity | 1. Is the volume of investigations straining the capacity of our system? | Count of active and overdue investigations |
| | 2. Do we have sufficient staff capacity to manage the volume of investigations? | Share of staff with 11 or fewer investigations, 12 investigations, 13-14 investigations, 15+ investigations |
| Quality | 3. Are we making face-to-face contact with alleged victims in a timely way? | Share of alleged victims with face-to-face contact within priority timeframes (24 or 72 hours) |
| | 4. Are we making consistent decisions regarding the level of identified risk at investigation closure? | Share of investigations with Category V, IV, III, II, and I dispositions |
| | 5. Are we making consistent decisions to open ongoing cases or remove children to out-of-home settings? | Share of Category I cases with out-of-home placements; share of Category III cases opening to CPS |
| Outcomes | 6. Are we closing cases that may have benefited from having services put in place? | Among investigations that did not open to CPS, share with subsequent contact to centralized intake / screen-in within 3 months |
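Several measures in these tables report caseload distributions rather than averages, for example the share of staff with 11 or fewer, 12, 13-14, or 15+ investigations. A minimal sketch of that bucketing, assuming a hypothetical list of whole-number active investigation counts, one per investigator:

```python
from collections import Counter

# Bucket boundaries taken from the field investigations table; other tables
# (ongoing cases, foster care) would use their own thresholds.
BUCKETS = [
    ("11 or fewer", lambda n: n <= 11),
    ("12", lambda n: n == 12),
    ("13-14", lambda n: 13 <= n <= 14),
    ("15+", lambda n: n >= 15),
]


def caseload_shares(caseloads):
    """Return the share of staff falling in each caseload bucket, in table order."""
    counts = Counter()
    for n in caseloads:
        for label, in_bucket in BUCKETS:
            if in_bucket(n):
                counts[label] += 1
                break
    total = len(caseloads)
    return {label: counts[label] / total for label, _ in BUCKETS}


if __name__ == "__main__":
    # Hypothetical active investigation counts for eight investigators.
    print(caseload_shares([9, 11, 12, 12, 13, 14, 15, 18]))
    # {'11 or fewer': 0.25, '12': 0.25, '13-14': 0.25, '15+': 0.25}
```

Reporting the buckets separately shows how many investigators carry the heaviest caseloads, which a single average caseload figure would obscure.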

ONGOING CASES

| Category | Key Question | Related Measure |
|---|---|---|
| Capacity | 1. Is the volume of cases straining the capacity of our system? | Count of children in open cases who are in-home, out-of-home |
| | 2. Do we have sufficient staff capacity to manage the volume of ongoing cases? | Share of staff with caseloads of 16 or fewer families, 17 families, 18-19 families, 20+ families |
| | 3. Do we have sufficient service capacity to meet the needs of families? | Number of families on waitlist by program type |
| Quality | 4. Are we making face-to-face contact with children on a monthly basis? | Share of children with face-to-face visit in last 30 days |
| | 5. Are we making face-to-face contact with parents and caregivers on a monthly basis? | Share of primary caregivers / parents with goal of reunification with face-to-face visit in last 30 days |
| | 6. Are we regularly updating service plans to meet family needs and improve time to case closure? | Share of families with updated service plans / family team meetings within last 90 days |
| Outcomes | 7. Are we successfully providing supports that lower risk and keep children safe in-home? | Count of cases escalating to Category I or II |
| | 8. Are we effectively supporting families to care for their children and promote child safety and wellbeing? | Share of Category III cases closing within 90 days; share of Category I and II ongoing in-home cases closing within 6 months, 12 months |
| | 9. Are we effectively reducing the occurrence of repeat maltreatment? | Share of children with substantiated subsequent maltreatment within 1 month, 6 months, 12 months of case closure |

OUT-OF-HOME PLACEMENT

| Category | Key Question | Related Measure |
|---|---|---|
| Capacity | 1. Is the volume of out-of-home placements straining the capacity of our system? | Count of children in out-of-home care by placement type (kinship care-licensed, kinship care-unlicensed, foster care, residential, independent living, shelter, other) AND Share of children in each placement setting by race/ethnicity |
| | 2. Do we have sufficient staff capacity to support the volume of out-of-home placements? | Share of foster care workers with caseloads of ≤14, 15, 16-17, or 18+ children; share of state and private worker caseloads meeting target |
| | 3. Do we have enough available beds to meet the need for out-of-home care? | Utilization of available beds in residential, state foster care, private agency foster care |
| Quality | 4. Are we successfully placing children in appropriate placements? | Count of sibling groups placed separately; count of children under 12 placed in residential / shelter; share of children placed out of county |
| | 5. Are we supporting the placement stability of children in out-of-home care? | Share of children experiencing a placement disruption within 30 days of entering a new placement |
| | 6. Are we maintaining family connections for children placed out-of-home? | In cases with goal of reunification, share of children with visitation with their parents never / twice / four times in past month |
| | 7. Are we bringing youth who run away back into care quickly? | Count of runaway youth by length of time on runaway status (0-7 days, 8-30 days, 31+ days) |
| | 8. Are we making sure children do not linger in foster care? | Number of children in out-of-home care by length of stay (0-11 months, 12-23 months, 24-35 months, 36+ months) |
| | 9. Are we matching children who have adoption goals to adoptive families in a timely way? | Count of children waiting for adoption by adoption status (matched to family, waiting for family) |
| | 10. Are we supporting families to successfully navigate the challenges of reunification? | Count of families enrolling and persisting in after care following reunification |
| | 11. Are we effectively emancipating youth for successful transitions to adulthood? | Count of youth exiting to emancipation; share enrolled in transitional support program AND Share of youth aging out by race/ethnicity |
| Outcomes | 12. Are we keeping children safe while in out-of-home care? | Count of substantiated incidents of maltreatment in care by placement type |
| | 13. Are we supporting children to achieve permanency in a timely way? | Share of children achieving permanency within 6 months, 12 months, 24 months of entering care |
| | 14. Are we successfully reunifying children with their families? | Share of children exiting care to reunification, adoption/guardianship, or emancipation |
| | 15. Are we reducing re-entry into care? (Are reunified families staying together?) | Of all children exiting care 12 months ago, share that re-entered within 1 month, 6 months, 12 months |
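The re-entry measure in the last row is cohort-based: it follows children who exited care in a given month and asks how many came back within each window. A minimal sketch, assuming hypothetical (exit date, re-entry date) pairs and approximating 1, 6, and 12 months as 30, 182, and 365 days; an agency would substitute its own definitions.

```python
from datetime import date

# Approximate windows for "within 1 month, 6 months, 12 months"; an assumption,
# not an official definition.
WINDOWS = {"1 month": 30, "6 months": 182, "12 months": 365}


def reentry_shares(cohort):
    """cohort: list of (exit_date, reentry_date_or_None) for children who exited care
    in the same month. Returns the cumulative share re-entering within each window."""
    total = len(cohort)
    shares = {}
    for label, max_days in WINDOWS.items():
        reentered = sum(
            1
            for exit_date, reentry_date in cohort
            if reentry_date is not None and (reentry_date - exit_date).days <= max_days
        )
        shares[label] = reentered / total
    return shares


if __name__ == "__main__":
    # Hypothetical cohort: four children who exited care in January 2020.
    cohort = [
        (date(2020, 1, 15), date(2020, 1, 25)),   # returned after 10 days
        (date(2020, 1, 15), date(2020, 4, 24)),   # returned after 100 days
        (date(2020, 1, 15), date(2020, 11, 10)),  # returned after 300 days
        (date(2020, 1, 15), None),                # has not re-entered
    ]
    print(reentry_shares(cohort))
    # {'1 month': 0.25, '6 months': 0.5, '12 months': 0.75}
```

Restricting the cohort to children who exited 12 months ago, as the table specifies, means every child in it has had the full follow-up period, so the 12-month share is not understated by recent exits.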

1. For example, a common measure of maltreatment in care is calculated as the number of substantiated allegations divided by the total number of days in foster care across all children in foster care during the time period, multiplied by 1,000. The resulting measure, “Maltreatment in care per 1,000 foster care days,” is problematic as a tool for agency management: (1) it is difficult to estimate the risk of maltreatment in care for an individual child, (2) the presentation does not include any information that could help uncover which children are most at risk, such as those in certain types of placements, and (3) the formulation as a ratio can make it appear that the system is improving when in fact the trend may be driven by more children spending time in foster care. A better measure for agency leaders to review regularly is the monthly count of substantiated incidents of maltreatment in care, disaggregated by placement type.
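Point (3) in this footnote can be checked with simple arithmetic. The sketch below computes the ratio described above for two hypothetical periods with the same number of substantiated allegations; because more children spend time in care in the second period, the rate falls even though the number of incidents has not changed. All figures are invented.

```python
def maltreatment_per_1000_days(substantiated, foster_care_days):
    """Substantiated allegations divided by total foster care days, multiplied by 1,000."""
    return substantiated / foster_care_days * 1000


if __name__ == "__main__":
    # Hypothetical periods: same number of substantiated allegations (30),
    # but 2,000 vs 2,500 children, each in care for the full 365 days.
    period_1 = maltreatment_per_1000_days(30, 2000 * 365)
    period_2 = maltreatment_per_1000_days(30, 2500 * 365)
    print(round(period_1, 4), round(period_2, 4))  # 0.0411 0.0329
```

The rate drops by 20% between the two periods, while the monthly count of substantiated incidents, the measure recommended above, would show no change.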

*The Government Performance Lab (GPL) at the Harvard Kennedy School of Government conducts research on how governments can improve the results they achieve for their citizens. An important part of this research model involves providing hands-on technical assistance to state and local governments. Through this involvement, the GPL gains insights into the barriers that governments face and the solutions that can overcome these barriers. By engaging current students and recent graduates in this effort, the GPL is able to provide experiential learning as well. The GPL wishes to acknowledge that these materials are made possible by grants and support from Casey Family Programs and the Laura and John Arnold Foundation. For more information about the Government Performance Lab, please visit our website at https://govlab.hks.harvard.edu